Configuration and Building

This section provides information about configuring and building the Axom software after you have cloned the repository. The main steps for using Axom are:

- Configure, build, and install third-party libraries (TPLs) on which Axom depends.

- Build and install Axom component libraries that you wish to use.

- Build and link your application with the Axom installation.

Depending on how your team uses Axom, some of these steps, such as installing the Axom TPLs and Axom itself, may need to be done only once. These installations can be shared across the team.

Requirements, Dependencies, and Supported Compilers

Basic requirements:

- C++ Compiler

- CMake

- Fortran Compiler (optional)

Compilers we support (listed with minimum supported version):

- Clang 4.0.0

- GCC 4.9.3

- IBM XL 13

- Intel 18

- Microsoft Visual Studio 2015

- Microsoft Visual Studio 2015 with the Intel toolchain

Please see <axom_src>/scripts/uberenv/spack_configs/*/compilers.yaml for an up-to-date list of the supported compilers for each platform.

External Dependencies:

Axom’s dependencies come in two flavors: library dependencies contain code that Axom must link against, while tool dependencies are executables that we use as part of our development process, e.g. to generate documentation and format our code. Unless otherwise marked, the dependencies are optional.

Library dependencies

| Library | Dependent Components | Build system variable |
|---|---|---|
| Conduit | Sidre (required) | CONDUIT_DIR |
| HDF5 | Sidre (optional) | HDF5_DIR |
| Lua | Inlet (optional) | LUA_DIR |
| MFEM | Quest (optional) | MFEM_DIR |
| RAJA | Mint (optional) | RAJA_DIR |
| SCR | Sidre (optional) | SCR_DIR |
| Umpire | Core (optional) | UMPIRE_DIR |

Each library dependency has a corresponding build system variable (with the suffix _DIR) to supply the path to the library’s installation directory. For example, HDF5 has the corresponding variable HDF5_DIR.
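As a rough illustration of the _DIR convention, the following shell sketch turns NAME=path pairs into the matching CMake arguments. The helper name tpl_dir_flags and the /opt/tpls paths are hypothetical placeholders, not part of Axom.

```shell
# Build CMake -D<NAME>_DIR=<path> arguments from "NAME=path" pairs.
# tpl_dir_flags is an illustrative helper; the paths used below are
# hypothetical placeholders, not real install locations.
tpl_dir_flags() {
    flags=""
    for pair in "$@"; do
        name=${pair%%=*}     # text before the first '='
        dir=${pair#*=}       # text after the first '='
        flags="$flags -D${name}_DIR=${dir}"
    done
    # Strip the leading space before printing.
    printf '%s\n' "${flags# }"
}

tpl_dir_flags CONDUIT=/opt/tpls/conduit HDF5=/opt/tpls/hdf5
```

The printed arguments could then be appended to a cmake or config-build.py command line.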

Tool dependencies

| Tool | Purpose | Build system variable |
|---|---|---|
| clang-format | Code Style Checks | CLANGFORMAT_EXECUTABLE |
| CppCheck | Static C/C++ code analysis | CPPCHECK_EXECUTABLE |
| Doxygen | Source Code Docs | DOXYGEN_EXECUTABLE |
| Lcov | Code Coverage Reports | LCOV_EXECUTABLE |
| Shroud | Multi-language binding generation | SHROUD_EXECUTABLE |
| Sphinx | User Docs | SPHINX_EXECUTABLE |

Each tool has a corresponding build system variable (with the suffix _EXECUTABLE) to supply the path to the tool’s executable. For example, Sphinx has the corresponding build system variable SPHINX_EXECUTABLE.
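One possible way to fill in the _EXECUTABLE variables is to look each tool up on PATH. The sketch below assumes the executables are named doxygen and sphinx-build on your system; tool_flag is a hypothetical helper, not an Axom script.

```shell
# Print -D<VAR>=<path> if the executable is found on PATH, else print nothing.
# tool_flag is an illustrative helper; the executable names passed to it
# below are assumptions about how the tools are installed locally.
tool_flag() {
    var=$1
    exe=$2
    found=$(command -v "$exe" 2>/dev/null) || return 0
    printf '%s\n' "-D${var}=${found}"
}

tool_flag DOXYGEN_EXECUTABLE doxygen
tool_flag SPHINX_EXECUTABLE sphinx-build
```

Tools that are not installed simply produce no argument, which matches their optional status.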

Note

To get a full list of all dependencies of Axom’s dependencies in an uberenv build of our TPLs, go to the TPL root directory and run the following Spack command: ./spack/bin/spack spec uberenv-axom.

Building and Installing Third-party Libraries

We use the Spack Package Manager to manage and build TPL dependencies for Axom. The Spack process works on Linux and macOS systems. Axom does not currently have a tool to automatically build dependencies for Windows systems.

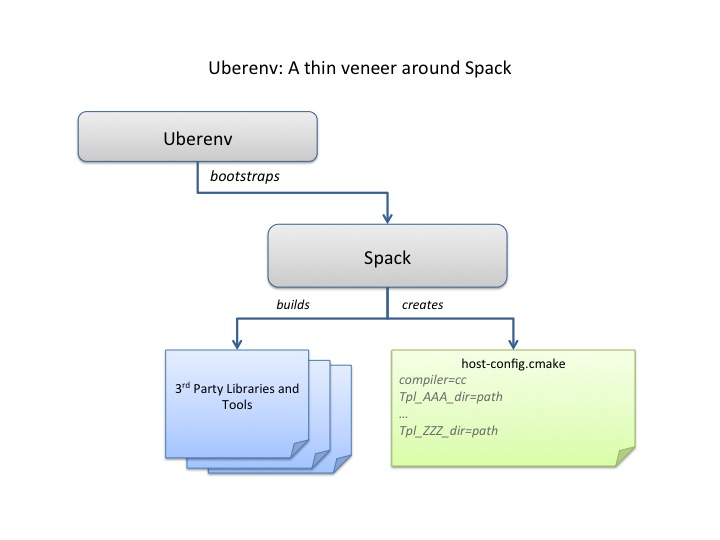

To make the TPL process easier and more automatic (you don’t need to learn much about Spack), we drive it with a Python script called uberenv.py, which is located in the scripts/uberenv directory. Running this script does several things:

- Clones the Spack repo from GitHub and checks out a specific version that we have tested.

- Configures Spack compiler sets, adds custom package build rules and sets any options specific to Axom.

- Invokes Spack to build a complete set of TPLs for each configuration and generates a host-config file that captures all details of the configuration and build dependencies.

The figure illustrates what the script does.

The uberenv script is run from Axom’s top-level directory like this:

$ python ./scripts/uberenv/uberenv.py --prefix {install path} \
--spec spec \
[ --mirror {mirror path} ]

For more details about uberenv.py and the options it supports, see the uberenv docs.

You can also see examples of how Spack spec names are passed to uberenv.py in the Python scripts we use to build TPLs for the Axom development team on LC platforms at LLNL. These scripts are located in the directory scripts/uberenv/llnl_install_scripts.

Building and Installing Axom

This section provides essential instructions for building the code.

Axom uses BLT, a CMake-based system, to configure and build the code. There are two ways to configure Axom:

- Using the helper script config-build.py.

- Directly invoking CMake from the command line.

Either way, we typically pass in many of the configuration options and variables using platform-specific host-config files.

Host-config files

Host-config files help make Axom’s configuration process more automatic and reproducible. A host-config file captures all build configuration information used for the build such as compiler version and options, paths to all TPLs, etc. When passed to CMake, a host-config file initializes the CMake cache with the configuration specified in the file.

We noted in the previous section that the uberenv script generates a host-config file for each item in the Spack spec list given to it. These files are generated by Spack in the directory where the TPLs were installed. The name of each file contains information about the platform and spec.
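Because a host-config file is passed to CMake with -C, it is an ordinary CMake script made of cache-setting commands. The sketch below writes a deliberately tiny, hypothetical host-config to illustrate the format; the file name and all paths are placeholders, and real files generated by uberenv contain many more entries.

```shell
# Write a hypothetical, minimal host-config file to illustrate the format.
# The file name and every path in it are placeholders for illustration only.
hc_file="${TMPDIR:-/tmp}/example-host-config.cmake"

cat > "$hc_file" <<'EOF'
# Compilers (placeholder paths)
set(CMAKE_C_COMPILER   "/usr/bin/gcc" CACHE PATH "")
set(CMAKE_CXX_COMPILER "/usr/bin/g++" CACHE PATH "")

# Options and TPL locations (placeholder values)
set(ENABLE_FORTRAN OFF CACHE BOOL "")
set(CONDUIT_DIR "/opt/tpls/conduit" CACHE PATH "")
EOF

# Count the cache entries the file would preload when passed to `cmake -C`.
grep -c '^set(' "$hc_file"
```

Keeping these settings in a file rather than on the command line is what makes a configuration easy to reproduce and share.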

Python helper script

The easiest way to configure the code for compilation is to use the config-build.py Python script located in Axom’s base directory; for example:

$ ./config-build.py -hc {host-config file name}

This script requires that you pass it a host-config file. The script runs CMake and passes it the host-config. See Host-config files for more information.

Running the script, as in the example above, will create two directories to hold the build and install contents for the platform and compiler specified in the name of the host-config file.

To build the code and install the header files, libraries, and documentation in the install directory, go into the build directory and run make; for example:

$ cd {build directory}

$ make

$ make install

Caution

When building on LC systems, please don’t compile on login nodes.

Tip

Most make targets can be run in parallel by supplying the ‘-j’ flag along with the number of jobs to run simultaneously. For example, $ make -j8 runs make with up to 8 parallel jobs.
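If you would rather not hard-code the job count, one option is to derive it from the number of online processors. This is a minimal sketch: detect_jobs is a hypothetical helper, and it assumes getconf is available, falling back to a single job otherwise.

```shell
# Pick a job count for `make -j`: use the online CPU count, or fall back to 1.
# detect_jobs is an illustrative helper, not part of Axom's build system.
detect_jobs() {
    n=$(getconf _NPROCESSORS_ONLN 2>/dev/null) || n=1
    case "$n" in
        ''|*[!0-9]*) n=1 ;;   # not a plain number: fall back to one job
    esac
    printf '%s\n' "$n"
}

# Show the command that would be run, without actually invoking make.
echo "make -j$(detect_jobs)"
```

On shared machines you may want a smaller number than the full processor count.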

The Python helper script accepts other arguments that allow you to specify explicitly the build and install paths and build type. Following CMake conventions, we support three build types: ‘Release’, ‘RelWithDebInfo’, and ‘Debug’. To see the script options, run the script without any arguments:

$ ./config-build.py

You can also pass extra CMake configuration variables through the script; for example:

$ ./config-build.py -hc {host-config file name} \
-DBUILD_SHARED_LIBS=ON \
-DENABLE_FORTRAN=OFF

This configures CMake to build shared libraries and disables Fortran for the generated configuration.

Run CMake directly

You can also configure the code by running CMake directly and passing it the appropriate arguments. For example, to configure, build and install a release build with the gcc compiler, you could pass a host-config file to CMake:

$ mkdir build-gcc-release

$ cd build-gcc-release

$ cmake -C {host config file for gcc compiler} \
-DCMAKE_BUILD_TYPE=Release \
-DCMAKE_INSTALL_PREFIX=../install-gcc-release \
../src/

$ make

$ make install

Alternatively, you could forgo the host-config file entirely and pass all the arguments you need, including paths to third-party libraries, directly to CMake; for example:

$ mkdir build-gcc-release

$ cd build-gcc-release

$ cmake -DCMAKE_C_COMPILER={path to gcc compiler} \
-DCMAKE_CXX_COMPILER={path to g++ compiler} \
-DCMAKE_BUILD_TYPE=Release \
-DCMAKE_INSTALL_PREFIX=../install-gcc-release \
-DCONDUIT_DIR={path/to/conduit/install} \
{many other args} \
../src/

$ make

$ make install

CMake configuration options

Here are the key build system options in Axom.

| Option | Default | Description |
|---|---|---|
| AXOM_ENABLE_ALL_COMPONENTS | ON | Enable all components by default |
| AXOM_ENABLE_<FOO> | ON | Enables the Axom component named ‘foo’ (e.g. AXOM_ENABLE_SIDRE for the sidre component) |
| AXOM_ENABLE_DOCS | ON | Builds documentation |
| AXOM_ENABLE_EXAMPLES | ON | Builds examples |
| AXOM_ENABLE_TESTS | ON | Builds unit tests |
| BUILD_SHARED_LIBS | OFF | Build shared libraries. The default is static libraries |
| ENABLE_ALL_WARNINGS | ON | Enable extra compiler warnings in all build targets |
| ENABLE_BENCHMARKS | OFF | Enable Google Benchmark |
| ENABLE_CODECOV | ON | Enable code coverage via gcov |
| ENABLE_FORTRAN | ON | Enable Fortran compiler support |
| ENABLE_MPI | OFF | Enable MPI |
| ENABLE_OPENMP | OFF | Enable OpenMP |
| ENABLE_WARNINGS_AS_ERRORS | OFF | Treat compiler warnings as errors |

If AXOM_ENABLE_ALL_COMPONENTS is OFF, you must explicitly enable the desired components (other than ‘common’, which is always enabled).

See Axom software documentation for a list of Axom’s components and their dependencies.
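To illustrate selective component builds, the sketch below assembles the options for turning everything off and then enabling a chosen subset. The helper name component_flags is hypothetical, and the sketch does not check dependencies between components, so consult the component list before relying on a particular subset.

```shell
# Build the CMake arguments that disable all components by default and then
# enable only the ones named on the command line.
# component_flags is an illustrative helper, not part of Axom.
component_flags() {
    flags="-DAXOM_ENABLE_ALL_COMPONENTS=OFF"
    for comp in "$@"; do
        # AXOM_ENABLE_<FOO> uses the upper-cased component name.
        upper=$(printf '%s' "$comp" | tr '[:lower:]' '[:upper:]')
        flags="$flags -DAXOM_ENABLE_${upper}=ON"
    done
    printf '%s\n' "$flags"
}

component_flags sidre quest
```

The resulting arguments can be passed either to cmake directly or through config-build.py.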

Note

To configure the version of the C++ standard, you can supply one of the following values for BLT_CXX_STD: ‘c++11’ or ‘c++14’. Axom requires at least ‘c++11’, the default value.

See External Dependencies: for configuration variables to specify paths to Axom’s dependencies.

Make targets

Our system provides a variety of make targets to build individual Axom components and documentation, and to run tests, examples, etc. After running CMake (using either the Python helper script or directly), you can see a listing of all available targets by passing ‘help’ to make:

$ make help

The name of each target should be sufficiently descriptive to indicate what the target does. For example, to run all tests and make sure the Axom components are built properly, execute the following command:

$ make test

Compiling and Linking with an Application

Please see Using Axom in Your Project for examples of how to use Axom in your project.